OpenAI and Google release "activation atlases" for visualizing machine learning's internal thought process

(OpenAI)

OpenAI and Google have released a tool called activation atlases, which visualizes the interactions among individual neurons inside a neural network.

The results are map-like, psychedelic images that show how a neural network tells apart patterns, animal species, or similar-looking objects.

OpenAI says the tool helps shed light on the otherwise opaque decision-making of machine learning models.

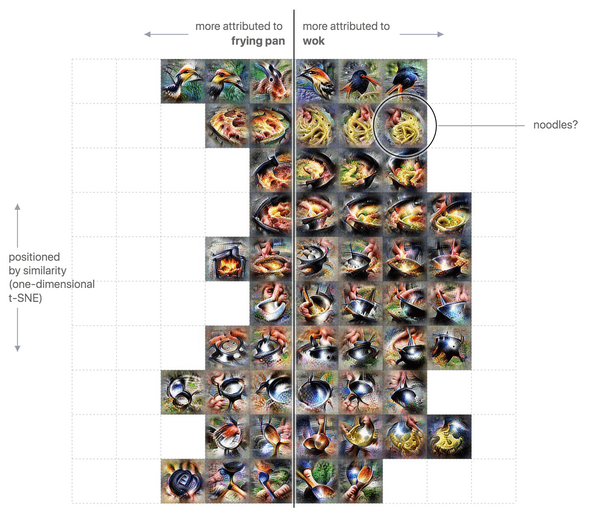

OpenAI also released a demo that lets users explore the atlases and discover, for example, how a neural network distinguishes a wok from a regular frying pan.

From OpenAI's blog post:

> For example, a special kind of activation atlas can be created to show how a network tells apart frying pans and woks. Many of the things we see are what one expects. Frying pans are more squarish, while woks are rounder and deeper. But it also seems like the model has learned that frying pans and woks can also be distinguished by food around them — in particular, wok is supported by the presence of noodles. Adding noodles to the corner of the image will fool the model 45% of the time!